In a recently published paper, Justin Flatt and his two co-authors proposed the creation of the Self-Citation Index, or s-index. The purpose of the s-index would be to measure how often a scientist cites their own work. This is desirable, the authors believe, because current incentive systems tend to encourage researchers to cite their own work excessively.

In other words, since the number of citations a researcher’s work receives enhances his or her reputation, there is a temptation to add superfluous self-citations to articles. Doing so boosts the author’s h-index – the author-level metric now widely used as a measure of researcher productivity and impact.
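For readers unfamiliar with the metric: a researcher has an h-index of h if h of their papers have each been cited at least h times. The minimal Python sketch below (the function name and the citation counts are hypothetical, chosen only for illustration) shows how a single well-placed self-citation can lift the index by one.

```python
def h_index(citation_counts: list[int]) -> int:
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites < rank:
            break  # every later paper has even fewer citations
        h = rank
    return h


# Hypothetical citation counts for five papers.
before = [5, 5, 5, 5, 4]
# The same record after one extra self-citation to the fifth paper.
after = [5, 5, 5, 5, 5]

print(h_index(before))  # 4
print(h_index(after))   # 5
```

Because the h-index only counts citations, it has no way of telling whether that extra citation came from the author or from someone else.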

Amongst other things, excessive self-citation gives those who engage in it an unfair advantage over more principled researchers, and one that, moreover, grows over time: a 2007 paper estimated that each self-citation increases the number of citations from others by about one after one year, and by about three after five years. This creates unjustified differences between researcher profiles.

Since women self-cite less frequently than men, they are put at a particular disadvantage. A 2016 paper found that men cite their own work between 50 and 70 per cent more often than women do.

In addition to unfairly enhancing less principled researchers’ reputations, say the paper’s authors, excessive self-citation is likely to have an impact on the scholarly record, since it ends up “diminishing the connectivity and usefulness of scientific communications, especially in the face of publication overload”.

None of this should surprise us. In an academic environment now saturated with what Lisa Mckenzie has called “metrics, scores and a false prestige”, Campbell’s Law inevitably comes into play. This states that “The more any quantitative social indicator is used for social decision-making, the more subject it will be to corruption pressures and the more apt it will be to distort and corrupt the social processes it is intended to monitor.”

Or as Goodhart’s Law more succinctly puts it, “When a measure becomes a target, it ceases to be a good measure.”

However, academia’s obsession with metrics, measures, and monitoring is not going to go away anytime soon. Consequently, the challenge is to try to prevent or mitigate the inevitable gaming that takes place – which is what the s-index would attempt to do. In fact, there have been previous suggestions of ways to detect possible manipulation of the h-index – a 2011 paper, for instance, mooted a “q-index”.

Journals are also known to try to game the Impact Factor. Editors may insist, for instance, that authors include superfluous citations to other papers in the same journal. This is a different type of self-citation, and it has sometimes led to journals being suspended from the Journal Citation Reports (JCR).

But while the s-index is an interesting idea, it would not be able to prevent self-citation. Nor would it distinguish between legitimate and illegitimate self-citations. Rather, says Flatt, it would make excessive self-citation more transparent (some self-citing is, of course, both appropriate and necessary). This, he believes, would shame researchers into restraining inappropriate self-citing urges, and help the research community to develop norms of acceptable behaviour.

Openness and transparency

However, any plans to create and manage a researcher-led s-index face a practical challenge: much of the data that would be needed to do so are currently imprisoned behind paywalls – notably behind the paywalls of the Web of Science and Scopus.

This is a challenge already confronting Flatt. As he explains below, he is now looking to study in more detail how people self-cite at different stages of their careers and across various disciplines. But to do this kind of research he needs access to the big citation databases, which means finding partners with access. “With all the accessibility barriers currently in place it has really boiled down to connecting with researchers that have precious full access to databases like Scopus and Web of Science,” he says. “Otherwise, I am stuck.”

What we learn from this is that if the research community is to become the master of its own fate, and to put its house in order, open access to research papers can only be the first step in a larger open revolution. This truth has dawned on OA advocates somewhat slowly. First came the realisation that making papers freely available can be of limited value if the associated data is not also made open access. More recently, OA advocates have had to acknowledge that if the replication crisis now confronting science is to be adequately addressed, the entire research process will have to be opened up – a larger objective now generally referred to as open science.

The proposal for an s-index reminds us that if the value of science is to be maximised, and researchers are to be prevented from cheating or behaving badly in other ways, greater openness and transparency are vital, not just with regard to research outputs and the research process, but with regard to all the data generated in the research process too, including citation data.

It was in recognition of this last point that the Initiative for Open Citations (I4OC) was launched earlier this year. I4OC will help build on the work that the Open Citation Corpus (OCC) has been engaged in for a number of years.

Nevertheless, there is some way to go before these open citation initiatives will be in a position to provide the necessary raw material to allow projects like the s-index to prove successful. Until then, any researcher-led attempts to study self-citation behaviours will be inhibited or will require paid access to paywalled products. “In the long-term, we need the Initiative for Open Citations (I4OC) to succeed with their goal of making citation data fully accessible,” says Flatt. “Right now, the data is locked away.”

In the meantime, of course, publishers may set about creating services like the s-index, and these would inevitably be paid-for services.

The larger issue here is that if the research community wants to free itself from the usurious fees that legacy publishers have long levied for the services they provide, it will need to take a broader view, and think beyond the rhetoric of access to research. Right now we can see publishers adapting themselves to a world without paywalls by inserting themselves directly into the research workflow, and building new products based on data spawned by the many activities and interchanges that researchers engage in – products that some believe represent a new kind of enclosure. Publishers’ goal is quite clear: to continue controlling the scholarly communication infrastructure, but by other means than acquiring content in the shape of research papers.

The danger is that ten years down the road the research community could find that it has simply swapped today’s overpriced subscription paywalls for a series of equally expensive and interlocked products and services created from its own data, but which publishers are monetising. Consider, for instance, the way in which Elsevier has been buying up companies like Pure, Mendeley, Plum Analytics, SSRN and bepress, enhancing existing products like Scopus, and then linking these different products together in ways intended to lock universities into what will doubtless become extremely costly portfolios of conjoined services.

As Bilder et al. pointed out in 2015, “Everything we have gained by opening content and data will be under threat if we allow the enclosure of scholarly infrastructures”.

Yet that is what we appear to be witnessing, and we can see this ongoing transformation in Elsevier’s changing self-characterisation. In 2010, it described itself as “the world’s leading publisher of science and health information”. Today it self-describes as “an information analytics company”.

For more information on the s-index please read the Q&A below with Justin Flatt, a research associate at the Institute of Molecular Life Sciences at the University of Zurich. His co-authors are Effy Vayena and Alessandro Blasimme, and their paper is called Improving the Measurement of Scientific Success by Reporting a Self-Citation Index.

The interview begins …

RP: You and your co-authors recently published a paper called Improving the Measurement of Scientific Success by Reporting a Self-Citation Index. In the paper, you make the crucial point that citations serve “to link together ideas, technologies, and advances in today’s packed and perplexing world of published science.” Most obviously this works at the paper level, but I guess citations can also provide a kind of timeline and map of a given research area (a concept that the Self-Journal of Science seeks to make transparent by encouraging researchers, in effect, to curate citation lists for their own field), or serve as a tool for detecting emergent trends in science. So apart from the obvious assistance they provide to the readers of a paper, citations can offer benefits to the wider field of study, to the discipline at large, and indeed to scientific endeavour more generally.

In addition, however, citations have become one of the ways in which an individual researcher’s scientific productivity and impact is judged. To put it crudely, the more citations a researcher receives for their papers the higher their standing in their community. These two different roles that citations now play, however, are to some extent at odds with one another, since the second one encourages researchers to cite their own work excessively, thereby undermining the first one, as it has the effect, as you put it, of “diminishing the connectivity and usefulness of scientific communications, especially in the face of publication overload”. If nothing else, it introduces a great deal of distracting noise.

But can you say a little more about the problems created by excessive self-citation?

JF: To be clear, self-citations certainly serve a critical function in instances where they represent coordinated, sustained, productive research efforts. Along these lines, I’ve never quite understood why so many scientists are eager to exclude them from performance reports. The trick is to try and understand them. And we need to know what’s going on here, especially with respect to cases of abuse, given how large a factor citations are in deciding future careers in academia.

The problems that arise because of excessive self-citation exist on at least three main levels: 1) it makes it harder to recognize and reward good science, 2) it diminishes the usefulness of manuscripts, and 3) it can jeopardize the very ideals of fairness that quantitative measures such as the h-index embody.

Consider the pressure excessive self-citation places on the early-stage researcher who can’t afford to go unnoticed, or on women, who appear to cite themselves far less than their male counterparts. At the very least, they will feel pressure to choose projects that will get picked up (i.e., cited) quickly by others over riskier endeavours, or, in the worst-case scenario, they may adopt excessive self-citing habits themselves.

After all, incorporating superfluous self-citations in one’s writing is easy, receives virtually no penalty, and can quickly increase visibility and recognition among colleagues, at least as captured by the wildly popular h-index.

RP: It might be helpful if you explained in more detail what, in your view, counts as inappropriate self-citation. I note, for instance, that a reviewer of your article pointed out that if a co-author writes a paper in which he cites a paper he wrote with you, that would not, by your definition, be considered a self-citation. Are there definitional issues here?

JF: Inappropriate self-citations are those that exist for no other reason than to make a researcher’s work seem more popular and well-regarded. They do not enhance the informative nature of manuscripts; rather, they serve to attract appreciation and cites from others.

You raise a key point with respect to defining it, and for this I think we should come together as a community to try and reach a consensus. In my mind, it makes sense to count self-cites for a given scientist when he or she is on the paper doing the citing, because under these circumstances they have a direct say in what makes the reference list. But of course, one could also look at this from the paper’s angle and check for cumulative self-promotion by counting each instance in which any author on the author list cites the work, compared with the total cites received from the surrounding community. This would clearly be a useful exercise.
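The two counting rules described here can be sketched in a few lines of Python. The data model (each citation recorded as the set of citing authors and the set of cited authors) and the function names are our own assumptions, not part of the paper. The example reproduces the reviewer’s case: a co-author, B, citing a paper written with A counts as a self-citation from the paper’s angle, but not as one of A’s self-citations.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Citation:
    """One reference from a citing paper to a cited paper (hypothetical model)."""
    citing_authors: frozenset[str]
    cited_authors: frozenset[str]


def author_self_citation_share(citations: list[Citation], author: str) -> float:
    """Author view: a citation counts only if `author` is on the citing paper."""
    received = [c for c in citations if author in c.cited_authors]
    if not received:
        return 0.0
    self_cites = sum(1 for c in received if author in c.citing_authors)
    return self_cites / len(received)


def paper_self_citation_count(citations: list[Citation]) -> int:
    """Paper view: a citation counts if any cited author is also a citing author."""
    return sum(1 for c in citations if c.citing_authors & c.cited_authors)


# Hypothetical: a paper by A and B, cited once by B alone, once by A alone, once by C.
paper_ab = frozenset({"A", "B"})
citations = [
    Citation(frozenset({"B"}), paper_ab),
    Citation(frozenset({"A"}), paper_ab),
    Citation(frozenset({"C"}), paper_ab),
]

print(author_self_citation_share(citations, "A"))  # 0.3333333333333333
print(paper_self_citation_count(citations))        # 2
```

The paper view counts two self-citations where the author view credits A with only one, which is exactly the definitional gap the reviewer raised.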

Drawing boundaries

RP: As you said, self-citation can nevertheless serve a useful role, if only because researchers are always building on their previous work, and citations help to map the development of a research area. The issue, therefore, is how the research community draws the boundaries of acceptable and unacceptable self-citation, and this will doubtless differ from discipline to discipline. I am thinking that arriving at a consensus as to what is acceptable self-citation may not be possible?

JF: This is exactly what the self-citation index at its very core is all about, that is, it aims to make excessive self-citation behaviour more identifiable, explainable, and accountable. Towards this goal, I can’t believe we’re not already looking at the data.

It’s strange because almost everyone I speak with agrees that self-citing is perfectly acceptable as long as it fits within the norm of one’s respective field, and yet we have no clue what that looks like. The boundaries will remain hidden as long as we keep the data out of sight. I think this can change if experts from the various disciplines have access to the data and make time to weigh in on the matter. And as you point out, each disciplinary community will have its own standards of appropriateness with respect to the use of self-citations.

RP: So how would your proposed s-index work?

JF: It’s nothing more than the h-index turned in on itself, so that we can see the proportion and placement of self-citations within the total number of citations that a scientist has received over the course of his or her career.

We believe that it nicely complements the h-index, making it easier to score scientific outputs in terms of impact and success, and at the same time it should help promote good citation habits.
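One reading of “the h-index turned in on itself” is that a scientist has an s-index of s if s of their papers have each received at least s self-citations. The sketch below assumes that definition, and also computes the overall share of self-citations, the quantity shown in the bottom row of Figure 1. The function names and the publication record are hypothetical.

```python
def s_index(self_cites_per_paper: list[int]) -> int:
    """h-index computed over per-paper self-citation counts (assumed definition)."""
    ranked = sorted(self_cites_per_paper, reverse=True)
    s = 0
    for rank, n in enumerate(ranked, start=1):
        if n < rank:
            break  # remaining papers have too few self-citations
        s = rank
    return s


def self_citation_proportion(papers: list[tuple[int, int]]) -> float:
    """Share of all citations received that are self-citations.

    Each paper is a (total_citations, self_citations) pair.
    """
    total = sum(t for t, _ in papers)
    self_total = sum(s for _, s in papers)
    return self_total / total if total else 0.0


# Hypothetical record: (total citations, of which self-citations) per paper.
record = [(40, 6), (25, 4), (12, 3), (9, 0), (3, 1)]

print(s_index([s for _, s in record]))             # 3
print(round(self_citation_proportion(record), 3))  # 0.157
```

Reporting both numbers side by side with the h-index is one way to show “proportion and placement” at a glance: the s-index says how concentrated self-citations are across papers, the proportion says how much of the total they make up.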

RP: On Twitter, someone posted a comment about your proposed s-index in which they said, “It’s a misleading metric unless it differentiates between legit & illegit self-citations. + it kind of favors self-plag over self-citation.” How would you respond to that?

JF: A self-citation index will not differentiate between legitimate and illegitimate citations. For this, there is no substitute for peer review. Rather, the s-index seeks to help each disciplinary community set its own standards of appropriateness with respect to use of self-citations. When reported, excessive tendencies should be easier to spot and this will likely serve to deter bad behaviour.

Furthermore, with the data out in the open, we expect that authors, reviewers, and editors alike will give more thoughtful attention to how works are being referenced and this will enhance the informative character of manuscripts.

RP: So, norms would evolve over time. But can we be sure that this would prevent excessive self-citation?

JF: Scoring self-citation will send multiple signals, which will take some time to unpack, but we expect to see norms eventually emerge.

However, reporting an s-index will not be able to fully prevent excessive behaviour. There will always be those questionable cases, but it’s reasonable to think that scientists will be far less likely to boost their own scores while others are watching.

RP: There has in recent years been growing concern that journals are also guilty of excessive self-citation. For instance, editors sometimes insist that authors cite superfluous papers previously published in the journal in order to boost the journal’s impact factor. Would your proposed s-index capture this practice?

JF: The beauty of the s-index is that it can be applied in various ways. We could certainly think about using it to examine journal behaviour. It would be a matter of mapping self-cites to a journal rather than an author.
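As a minimal sketch of what mapping self-cites to a journal might involve, one could compute, for each journal, the share of the citations it receives that come from articles in the same journal. The function name and the citation pairs are hypothetical, and this is not a reconstruction of any procedure used for the JCR.

```python
from collections import Counter


def journal_self_citation_rates(citations: list[tuple[str, str]]) -> dict[str, float]:
    """Map each cited journal to the share of its received citations that are self-citations.

    Each citation is a (citing_journal, cited_journal) pair.
    """
    received: Counter[str] = Counter()
    self_received: Counter[str] = Counter()
    for citing, cited in citations:
        received[cited] += 1
        if citing == cited:
            self_received[cited] += 1
    # Counter returns 0 for journals that never cited themselves.
    return {journal: self_received[journal] / n for journal, n in received.items()}


# Hypothetical citation pairs.
pairs = [("J1", "J1"), ("J2", "J1"), ("J1", "J1"), ("J1", "J2")]
print(journal_self_citation_rates(pairs))  # {'J1': 0.6666666666666666, 'J2': 0.0}
```

The same per-paper counts could equally be fed into an h-style index at journal level; the rate shown here is simply the most direct analogue of an author’s self-citation proportion.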

RP: I am wondering if a more worrying development is not what some call “citation cartels”, where groups of authors band together and cite each other superfluously for mutual benefit. Could your proposed s-index catch this behaviour?

JF: Again, I think it all goes back to how we define the initial parameters and of course the picture can get much more complicated.

This is interesting. I myself have wondered about something that resembles a citation cartel but is much more innocent: the case where a single professor grows a large lab of scientists who eventually go on to lead labs of their own on different topics within the same field of research. Each of them will continue to publish, and they will likely end up citing each other more than they cite people from the outside community. I think the behaviour is unintentional, but nevertheless it would be worthwhile to see how these cites really travel and the impact that they have on their respective fields.

Building on this thought, could it be the case that in some situations Europeans tend to cite Europeans more often than researchers from other continents, and vice versa? Perhaps as the s-index evolves we will start to visualize distinct networks, but this seems a far-off dream at the moment, since the data on self-citation is not readily available.

RP: I guess when researchers superfluously cite their supervisors, other senior researchers, and friends, they are usually doing it out of deference, as a way of currying favour?

JF: It’s difficult to really know what motivates researchers to superfluously cite their supervisors, senior researchers, and friends. Perhaps one does it to gain favour, show respect, or help out.

I tend to think that, in instances where it’s a big problem, there’s a high chance that the more senior person/supervisor is the one who really has an issue. The power that a supervisor has over the career of an early-stage scientist is huge, especially if it’s a postdoc we’re talking about. Speaking out about it could damage a relationship that is critical for moving up the academic ladder. What’s bad is when the trainee goes on later to behave the same way out of a sense of urgency to remain competitive.

I think a key way to help prevent the problem is to teach proper citation more formally during the training phase. Other than that, reviewers play a key role in ensuring citation quality, though I suspect that the job is not getting done sufficiently, based on my own experience and participation in the peer review process.

Finally, as bibliometrics will continue to be quite the force for the foreseeable future, a metrics-based solution like our s-index is essential.

RP: All the main citation databases include tools that allow self-citations to be removed. Some might argue that that is sufficient.

JF: First, excluding self-citations is not an appropriate action when self-cites are a result of continued, productive research efforts. Why would we ever want to throw out warranted self-citations from performance reports?

Second, it will do nothing to address the strong domino effect (i.e., cite yourself enough and others will follow).

Third, it does not promote good citation habits. This last part is critical to think about if we are to maintain strong connections between our ideas, technologies, and advances in the current overloaded publishing environment.

Gender

RP: You said earlier that there is a gender difference in the incidence of self-citation. Can you say something more about that?

JF: I really liked the study recently published by Molly King reporting that men tend to cite themselves significantly more than women. It is titled “Men set their own cites high: Gender and self-citation across fields and over time”. It found that men cite their own papers 56% more on average than women, according to an analysis of 1.5 million papers published between 1779 and 2011.

The study, which considered articles from across disciplines in the digital library JSTOR, found that the rate has risen to 70% in the past two decades despite an increase of women in academia.

From my experience in talking with others, there are diverse opinions about exactly what the results mean and this is where I think the s-index will be very handy. It could help to level the playing field for women if excessive self-citing is truly as one-sided as it seems.

RP: A reviewer of your paper pointed out that excessive self-citation is hard to avoid if only because the pressure to publish is causing researchers to engage in “scientific salami slicing”. As I understand it, this is where one piece of research is divided up and published as multiple papers rather than as a single discrete report.

Does this not make excessive self-citation inevitable? Perhaps the larger problem is the production of too many unnecessary papers? In other words, the emphasis today is on quantity rather than quality, where it should be the other way around?

JF: I do tend to agree that we focus too much on delivering fast scientific outputs, and if we’re not careful, we cut corners and cheapen the science. The flipside to this is that peer review can creep along at a very slow pace. It’s the mismatch between timing and expectations that keeps us anxious when it comes to publishing.

One interesting development is the creation of journals that aim to publish single observations rather than supporting the so-called story-telling format. This openly invites researchers to engage in “scientific salami slicing”, and while I understand the idea behind it, it also comes with some major concerns.

For example, could a journal that does this become an outlet for amplifying citations? I can imagine they are looking into this very thing and asking questions like how many citations are appropriate for a single observation. Regardless, the s-index will help to discourage the type of strategic manipulation that could be facilitated by this publishing format.

RP: You stress in your paper that the value of the s-index would lie in its openness and transparency. Ironically, citation data is not generally freely available today. There is the Initiative for Open Citations (I4OC), but this has some way to go to match subscription services like Web of Science and Scopus. As such, most of the raw material needed to create the s-index is behind a paywall today. To what degree is this an obstacle to your plans?

JF: The goal behind releasing the initial paper calling for a self-citation index was to raise awareness and to initiate discussion in a more formal format. Now I’ve started a collaboration to demonstrate the usefulness of the s-index, and hopefully a larger, more comprehensive study will be out later this year.

With all the accessibility barriers currently in place it has really boiled down to connecting with researchers that have precious full access to databases like Scopus and Web of Science. Otherwise, I am stuck.

In the long-term, we need the Initiative for Open Citations (I4OC) to succeed with their goal of making citation data fully accessible. Right now, the data is locked away, but the I4OC will push until we have it ready to be analysed in machine-readable fashion.

Tail eating?

RP: We have a plethora of metrics for measuring research and research performance today, including the impact factor and the h-index. The h-index was essentially a response to the problems of the impact factor. Your s-index is to some extent a response to the h-index. Then we have things like the r-index. Does measuring and monitoring science really have to be as complicated as this? Is it not a case of the snake eating its own tail? And does not every new metric simply introduce new problems?

JF: Like you, I am a bit wary of metrics. I think it’s impossible to measure the productivity and impact of a scientist using only a few numbers. For example, how do you score creativity, fairness, availability to colleagues, and project management skills?

Regardless of how we feel about these things, we must come to grips with the system that we are working in, and at the moment the h-index has won out as the metric that gets to say the most about our impact and productivity.

All things considered, I think the s-index can help the h-index do its job. I will even go so far as to say that it won’t introduce new problems. We are simply trying to be open with respect to showing self-citation data.

RP: As part of the paper you include a very small study (of, I believe, four people). You mention that you have a large follow-up study planned. Can you say something more about that?

JF: Again, with the first paper the goal was to initiate conversation. I would hesitate to call the report in any way a data-driven study, given the small sample size. Now I’ve started working with a data scientist who is very interested in exploring the usefulness of the s-index, and importantly, he has full access to a few of the big databases.

The study is just getting off the ground but we aim to look into how people self-cite at different stages in their careers and across various disciplines. This is the kind of stuff that needs to be done so that we have a shot at more easily identifying excessive behaviour.

RP: If successfully introduced, how do you think the s-index would evolve?

JF: I think implementation of the s-index will be pretty straightforward. What will change in time is the way that we view and handle citation data. Hopefully, the s-index ultimately provides an important contribution to the fair and objective assessment of scientific impact and productivity.

RP: This is not in your area of research, so someone would need to get funding and run with the idea, I guess?

JF: Yes, it will take the help of others to push the index forward. Luckily, I have found an expert in the area, who is happy to take on the project and has the funding and students to see it through. I am just happy that I can continue to be a part of the work.

RP: Thanks for agreeing to answer my questions. I look forward to seeing the results of your follow-up study.

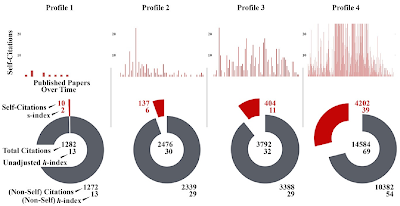

Figure 1. Transparency in citation reporting. Self-citation data (red) is shown for four biomedical researchers based on their papers in PubMed. The top row is a manuscript-level view of how each individual cites themselves over time. Each red bar represents a single paper that has been self-cited to some degree. Note that the cutoff for clarity here is just beyond 20 citations. It was chosen to provide a sense of how each researcher distributes self-citations over his or her published papers. The bottom row reveals the proportion of total citations that are self-cites.

1 comment:

Reading this made me feel dirty. It's the wrong solution. Shaming researchers for behavior the author doesn't like is a lousy thing to do. Self-citations are perfectly reasonable; how much one should cite oneself versus others depends upon whose work one builds on. Esoteric academics pursuing rabbit holes have no choice but to self-cite.

Rewarding self-citations seems to be the problem. Removing them from h-index calculations seems like a sensible fix. Punishing and shaming? That will build far worse skewed incentives than the current system.
