We are today seeing growing dissatisfaction with the pay-to-publish model for open access. As this requires authors (or their funders or institutions) to pay an article-processing charge every time they publish a paper, it is felt to be discriminatory, especially for non-funded researchers and those based in the Global South (see, for instance, here, here and here).

|

| PLOS' Sara Rouhi |

As a result, various alternative approaches intended to move away from APCs are emerging, including crowdfunding and membership schemes. Under a membership scheme, institutions are asked to commit to paying an annual fee to a publisher, with the aim of pooling sufficient funds to cover the costs of making all the papers in a journal open access.

One of the more successful implementations of this model is the Open Library of Humanities (OLH), which has operated what it calls its Library Partnership Subsidies scheme since 2015.

Clearly, if the annual fee is to be viewed as reasonable by those asked to take part, a sufficient number of institutions need to sign up.

The inherent weakness of the model is that a small group of community-minded institutions could end up paying all of a publisher’s costs and everyone else would be able to “free ride”. In effect, those who join a membership scheme could end up shouldering the costs for everyone to have free access.

A key question is how many universities are willing to join an OA membership scheme. OLH co-founder Martin Eve has estimated that there are only around 300 libraries that will do so.

Nevertheless, we should not doubt that there is a real wish to move beyond APCs, and many have come to believe that membership schemes are the best way of doing this. To be successful, however, they will need to broaden the pool of those willing to take part.

Annual Flat Fee

With this aim in mind, in October the Public Library of Science (PLOS) launched what it calls its Community Action Publishing (CAP) initiative for its two selective journals, PLOS Biology and PLOS Medicine. The hope is that the journals can be gradually moved away from having to charge APCs and that the publishing costs can be shared as widely as possible.

Specifically, PLOS is

inviting universities to pay an annual flat fee that will give their faculty

unlimited publishing opportunities in the journals (there are separate

“communities” for each journal) without the need to pay an APC each time. However,

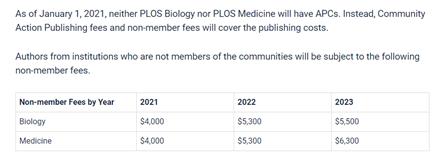

authors of institutions who do not join the scheme will be charged a non-member fee (NMF) that will increase in price over time, as below.

The rising cost of the NMF is intended to encourage universities that have not joined to do so in order to avoid their faculty having to pay to publish.

PLOS hopes that the annual fees will be low enough to attract a sufficient number of institutions but that the pooled funds will eventually be adequate to meet all the costs of publishing the journals. In the interim, the NMF means that there will be two separate revenue streams coming in and the free-riding issue will be avoided.

The hope is that the NMF can be discontinued when the pilot period ends (it lasts from 2021 until 2023). However, says PLOS Director of Strategic Partnerships Sara Rouhi, that will be dependent on all the large institutions that PLOS is targeting joining the scheme. “If we don’t have a stable membership by then, we might have to offset lack of participation with more NMFs”, she told me.

Tiers

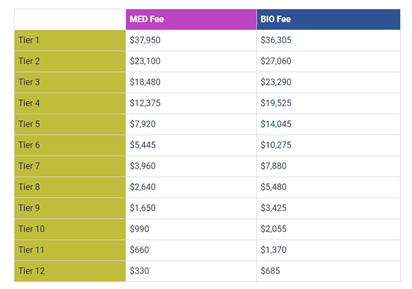

The annual fee institutions

will be asked to pay will be calculated by looking at their faculty’s publishing

activity between 2014 and Q3 2019. Based on that they will then be assigned to

a fee tier, as below.

To help broaden the pool, the annual fee will be calculated on the publishing activity not just of the institution’s corresponding authors, but of their contributing authors too. The aim is to ensure that “the cost of publishing is distributed more equitably among representative institutions.”

In other words, PLOS will calculate how often during the historical publishing activity period an institution was associated with corresponding authors and with contributing authors, and then assign a weighting to each type of author. Specifically, contributing-author articles will be weighted at half the weight of corresponding-author articles.

As the PLOS FAQ explains: “Publishing activity is counted by determining the number of times an institution was associated with a corresponding author and the number of times an institution was associated with contributing authors. Papers where the institution is affiliated with the corresponding authors are weighted as 1 article and papers where the institution is affiliated with the contributing author are weighted as ½ article. (Multiple contributing authors from the same institutions are counted only once).”
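To make the weighting concrete, here is a minimal Python sketch of how the count described in the FAQ might work. It is my own illustration, not PLOS's actual method: the `Paper` class, the `publishing_activity` function and the "Uni A/B/C" data are all hypothetical. The FAQ does not say what happens when an institution supplies both the corresponding author and a contributing author on the same paper, so the sketch assumes that such a paper counts once, at the full weight of 1.

```python
from dataclasses import dataclass


@dataclass
class Paper:
    # Hypothetical record: the sets hold institution names, so several
    # authors from the same institution collapse into a single entry.
    corresponding: set
    contributing: set


def publishing_activity(papers, institution):
    """Weighted article count for one institution, per the FAQ wording."""
    total = 0.0
    for paper in papers:
        if institution in paper.corresponding:
            # Corresponding-author affiliation: weighted as 1 article.
            # Assumption: this takes precedence over any contributing role.
            total += 1.0
        elif institution in paper.contributing:
            # Contributing-author affiliation: weighted as 1/2 article,
            # counted once however many contributing authors there are.
            total += 0.5
    return total


# Made-up example data, for illustration only.
papers = [
    Paper(corresponding={"Uni A"}, contributing={"Uni B", "Uni C"}),
    Paper(corresponding={"Uni B"}, contributing={"Uni A"}),
    Paper(corresponding={"Uni C"}, contributing=set()),
    Paper(corresponding={"Uni A"}, contributing={"Uni A", "Uni B"}),
]

for name in ("Uni A", "Uni B", "Uni C"):
    print(name, publishing_activity(papers, name))
```

The resulting figure would then be what places an institution in a fee tier. The tier boundaries themselves are not given in the text above, so they are left out of the sketch.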

Universities whose faculty have never published in the journals can also opt to pay the lowest tier’s fee in order to “insure” themselves against the possibility that one or more of their faculty might publish in the journals within the time period of the scheme (Jan 1st 2021 – Dec 31st 2023).

In addition, says PLOS, Research4Life countries are automatically members of each community so researchers in those countries will never be subject to fees. And authors unable to pay non-member fees can apply for waivers as per the standard fee-waiver mechanisms offered by PLOS.

To signal its own commitment to the community, PLOS has set targets for each journal and will cap its margin at 10%. Revenue exceeding the community targets will go back to members at renewal.

Time will tell how successful the scheme will be in broadening the pool beyond the 300 libraries that normally join OA membership schemes. Certainly, the challenge will be that much greater given that it is being launched at a time when many libraries are signing expensive transformative agreements with legacy publishers and universities are facing the financial impact of the pandemic. Some have also suggested that, as selective journals, PLOS Biology and PLOS Medicine do not publish a sufficient number of papers to make it worthwhile for many institutions to participate.

My initial thought was that PLOS will be targeting three separate types of institution (corresponding-author institutions, contributing-author institutions, and “insurance” institutions). Sara Rouhi suggests that this is not the way to view it. Below is an exchange I had with her.

Levers

RP: I understand the PLOS Community Action initiative will target 3 types of institution:

1. Corresponding-author institutions.

2. Contributing-author institutions.

3. Insurance institutions (those institutions whose researchers don’t currently publish with the PLOS journals but who might want to insure against the possibility that one of their researchers will do so in the future).

I guess there will be some overlap between 1 and 2 but I assume you will have done some calculations on the numbers of potential institutions for each category. If so, can you share them with me?

SR: While we initially grouped institutions this way, libraries and consortia consistently fed back to us that this was more complicated/confusing than it needed to be. So rather than think of different types of “institutions,” it’s easier to think of different publishing activity “types” – publishing as a lead author, publishing as a contributing author, or publishing with affiliations in both designations.

Given the selectivity and niche of these journals, some research-intensive universities publish little or not at all, and smaller institutions publish more frequently as lead authors. Simplifying the participation criterion to just publishing activity elides the traditional distinction of “research intensive” vs. “teaching” institutions etc.

I don’t want to add further confusion by grouping institutions as you’ve indicated. We purposely eliminated this distinction for clarity.

RP: Ok. I ask this question in the context of Martin Eve’s estimate that there are only 300 libraries that will support OA membership schemes. (I should add that he said this in the context of a new initiative from COPIM that aims [like PLOS] to “broaden the pool” of universities willing to join a membership scheme).

SR: As you say, Martin Eve identified about 300 libraries that will support OA membership schemes. There’s some nuance worth flagging, however.

The TL;DR is that Martin’s statement is true if you assume institutions that are participating for largely altruistic reasons – because it’s the “right thing to do.” The PLOS CAP model couldn’t be predicated on that as a buying motivation. So it helps to go back to “collective action” basics. Credit to Dr. Kamran Naim for elucidating this at the Basel Sustainable Publishing Forum last week.

For collective action schemes to work there are generally two levers you can pull:

1. Encourage “pro-collective” behaviour by leveraging group affinity and social incentives

2. Appeal to economic self-interest (aka “private benefit”)

From my perspective, SCOAP3 and OLH lean heavily on the first lever. Participating in them is the “right” thing to do, so libraries support them.

The challenge with only pulling on this lever is sustainability over time. SCOAP3 relies heavily on CERN’s ongoing support and has the challenge of large beneficiaries like Russia, Brazil and India not participating while benefitting from the open content.

OLH memberships are very small financial commitments that maintain OLH but make growth of the program difficult. Martin talked about this on a webinar we did for UKSG earlier in the year.

A collective model that focuses primarily on the second lever is Subscribe2Open. The primary benefit is getting the community to agree to make content open in exchange for a discount. If all members do not opt in, the content stays behind a paywall. The combination of a private benefit (the discount) with the public good (making the content open) is a strong motivator for participation and a big part of why the model is so successful.

PLOS Community Action Publishing attempts to pull both levers. The pro-collective/group affinity/social incentives lever relates to the moral imperative – especially in a time of pandemic – to make biomedical research open to read and open to publish. Jeff Kosokoff’s comment that “Open Access is social justice,” speaks to this. Indeed, we have many commitments based on this mission-aligned priority for libraries. However, what has moved partners from mere interest to actual commitment is the equitable fee structure (and relative affordability given the current budget crisis).

That said, without including appeals to economic self-interest, the PLOS CAP model would suffer from the same sustainability issues as other collectives. Hence our implementation of a secondary, “back up” revenue stream – non-member fees (NMFs) for authors from non-CAP members.

These NMFs are not meant to penalize authors but rather to encourage libraries to join rather than expose their authors to fees. For many institutions, the NMFs per article will be higher than the annual fee the library would pay to join one or both collectives.

Benefits

The NMFs help offset slow uptake by institutions that are not able to find the funds to support a model like this immediately. Thanks to those fees coming in, libraries can take more time to join and we can offer flexibility for institutions that need to leave the collective.

There are several benefits to this:

Enlarging the pool of institutions that would participate in this collective gives us the flexibility to create a much finer-tuned fee structure with a long tail of low dollar fee tiers to accommodate institutions that do not publish frequently in either journal.

Those low fee tiers allow institutions strapped for funds and/or infrequent publishing institutions to participate because they support the moral imperative of equity and inclusivity that the model promotes. It simply doesn’t cost them that much to “do the right thing.”

Research-intensive institutions then derive benefit from the “research light” institutions participating since they offset some of the cost burden. More institutions of varying publishing intensity means lower fees for everyone.

So, Martin is right if you’re counting only relatively wealthy institutions that want to do the “right thing.” If you start adding other considerations, especially private benefits (aka, we don’t want our authors to see non-member fees) and recognition of contributing author affiliations, you get a much larger pool and set of incentives to motivate ongoing participation.

Targets

As for our target institutions:

For PLOS Medicine there are 909 total institutions with some publishing history (some combination of lead and co-author affiliations) in the time period we evaluated.

44 of those are in Tiers 1-4

80 are in Tiers 5-7

The remainder are in Tiers 8-12

For PLOS Biology there are 1,557 total institutions with some publishing history (some combination of lead and co-author affiliations) in the time period we evaluated.

45 are in Tiers 1-5

152 are in Tiers 6-8

The remainder are in Tiers 9-12

As I say, I wouldn’t necessarily map these rough groupings of similar institutions onto “corresponding author institutions, contributing author institutions, and insurance institutions,” especially since that does not include the thousands of organisations with no publishing history who might want to participate.

However, it’s probably fair to say that the highest tiers have institutions that published a lot in both designations. The middle tiers were a combination but at lower volume, and the lowest tiers were low volume and mostly in the contributing author designation.

Threat

RP: In a webinar you gave in September you said that if transformative agreements flourish there probably won’t be sufficient money left in library budgets to support schemes like PLOS Community Action. How big a threat do you think there is here?

SR: This, of course, comes down to how you define “transformative agreements.” If you mean the standard RAP/PAR deals that integrate historic subscriptions with open access publishing that stays revenue neutral, then yes. If these are negotiated with large commercial publishers first, we’re looking at a new kind of “big deal” that locks in library monies with subscription publishers of hybrid open-access journals. Non-profits, small societies, and native-OA publishers may very well not make it out the other side of this transition (especially if “read” institutions’ subscription monies exit the system).

It’s hard to know how big the threat is as it ultimately comes down to choices libraries make. Many appear to be balancing both imperatives – looking at where they publish and spend the most and evaluating how mission aligned those outlets are.

It’s not easy.

RP: So, what are your expectations about the choices that libraries will make with regard to the PLOS Community Action Publishing scheme?

SR: It’s totally dependent on region, funding structures (block grants in UK versus how the US does it) and how radical libraries want to be about cancelation and re-negotiations.

I have personally been overwhelmed by the number of cash-strapped organisations that are pushing to support this model and find the money.

The December commitment update will tell the tale!

RP: Thank you. And good luck!